Many international education marketers now use ChatGPT, either the free version (GPT-3.5) or the paid version (GPT-4, currently about US$20 per month). As in other industries, users tend to cite the time it saves them at work, provided it delivers the results they are looking for. When the results are disappointing, however, ChatGPT can feel like a waste of time, and frustrated users often revert to their usual ways of working.

This is where understanding a concept called “prompt engineering” can be very helpful. In a recent ICEF webinar, “ChatGPT for Education Marketing: Opportunities, Strategies, and Results,” Philippe Taza, Founder and CEO of HEM Education Marketing Solutions, explained that prompt engineering is “the practice of designing inputs for generative AI tools that produce optimal outputs.”

How to get productive results from ChatGPT

A poll of the live webinar audience suggested that the discussion of prompt engineering was timely. When asked what they struggle with most when using ChatGPT, 45% said “crafting the right prompt to get the desired response,” followed by “understanding the capabilities and limitations of AI” (27%), “integrating ChatGPT into existing workflows” (24%), and “managing token limits and usage” (4%).

One of the most common uses for ChatGPT is text generation. As any writer knows, producing good text depends on developing an idea and working out the best way to present it. This is not an instant process but a series of steps, so it makes sense that ChatGPT needs more than a hasty prompt along the lines of “Here's what I need, and here are the basics.”

Taza says his work is guided by an iterative process of “prime, prompt, polish.” Following this approach, you begin by priming the AI, that is, setting out the general context for its task and giving it all the information it needs to carry that task out. Next, you prompt the AI by giving it the task itself. Finally, you and the AI polish the result together, much like a final edit to make it the best it can be.

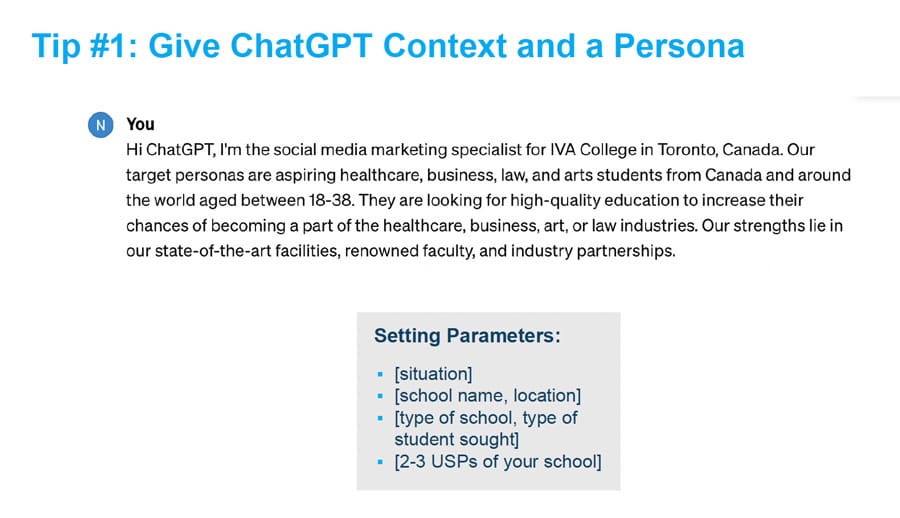

Taza gave the example of a fictitious university in Toronto, Canada. The context he provided to the AI was:

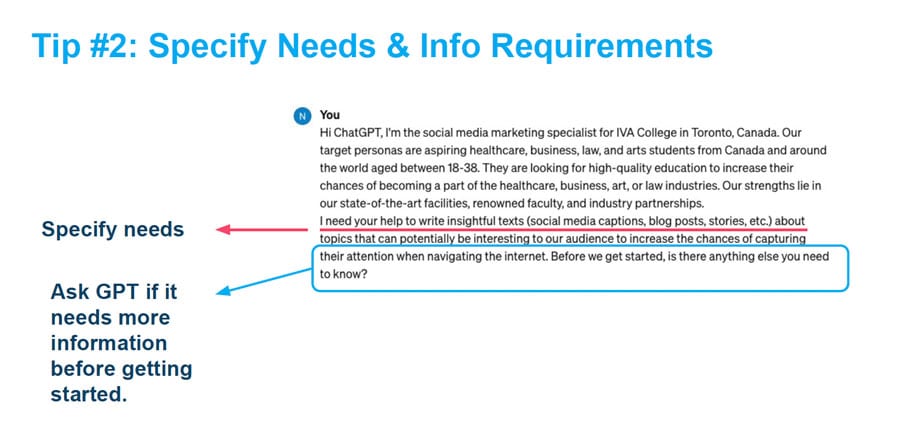

The next step in the process is the prompt. In the screenshot below, note how Taza stresses the importance of asking the AI whether it has everything it needs to complete the task.

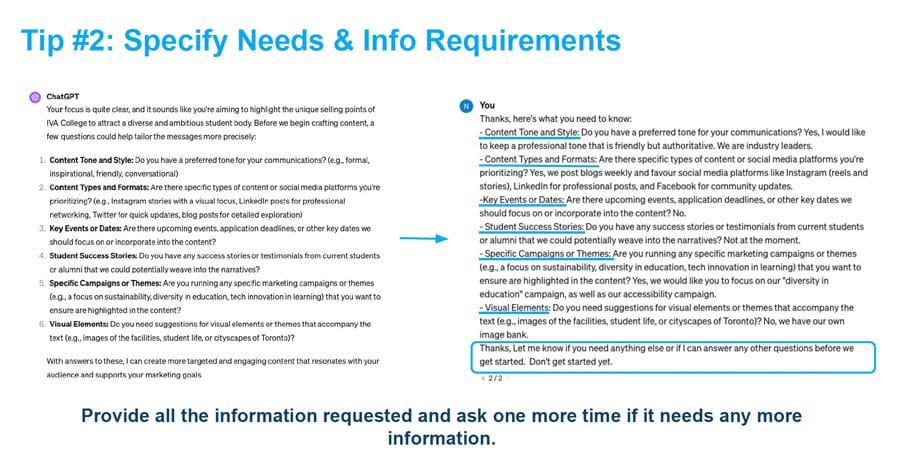

As the following screenshots show, it turned out that the AI actually needed more information to do its job well.

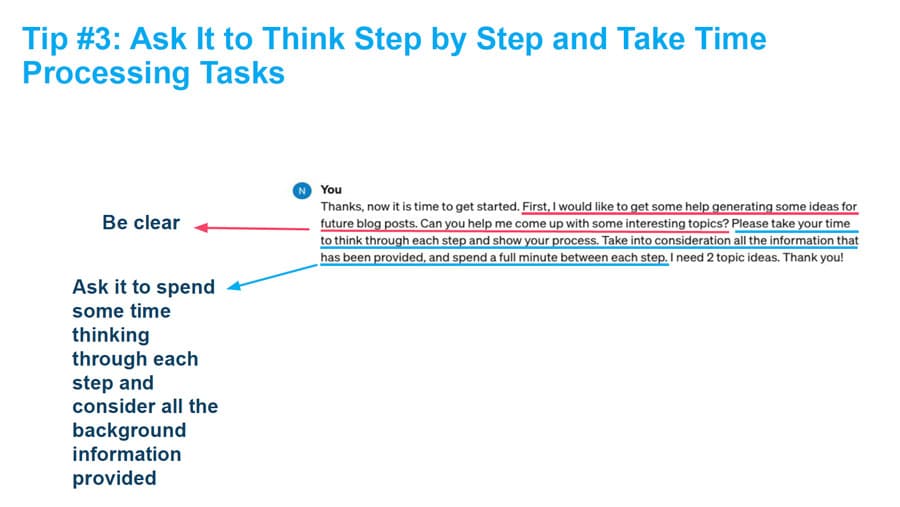

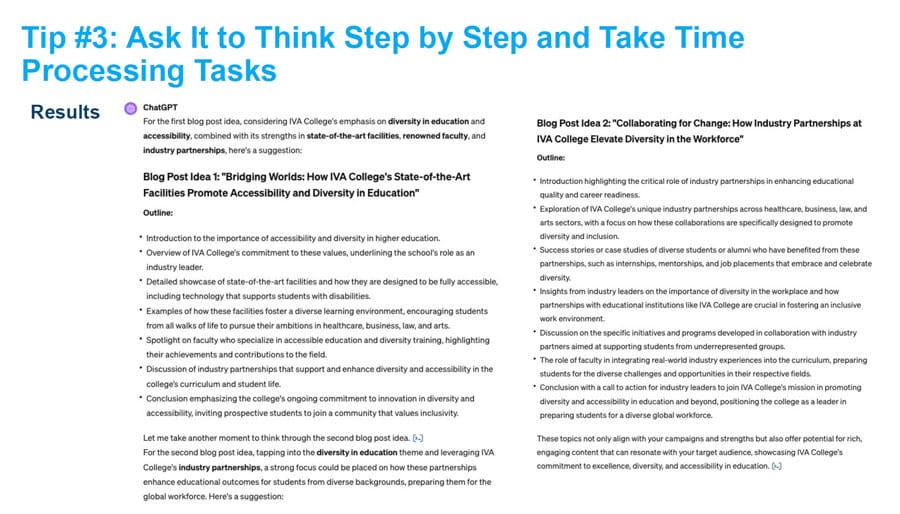

When we think about AI and what we want it to do, we often want to save time. Paradoxically, though, as the next slide shows, it is important to slow the AI down a little (gently) to get good results. (Of course, the AI still works incredibly fast, so “slowing down” is relative here.)

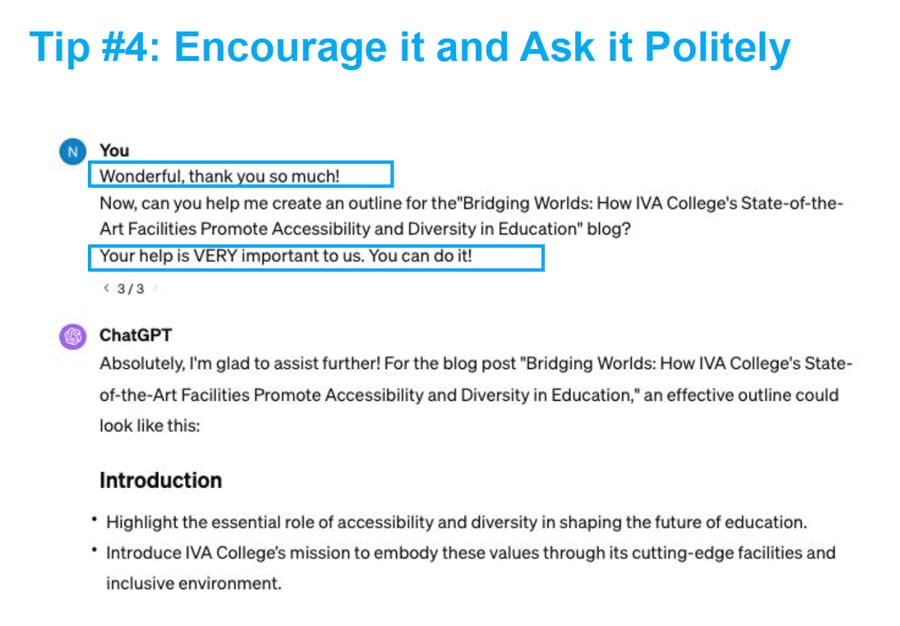

As the following screenshot shows, the AI can deliver good results. The screenshot after it shows the prompter asking the AI for further help in a very encouraging way.

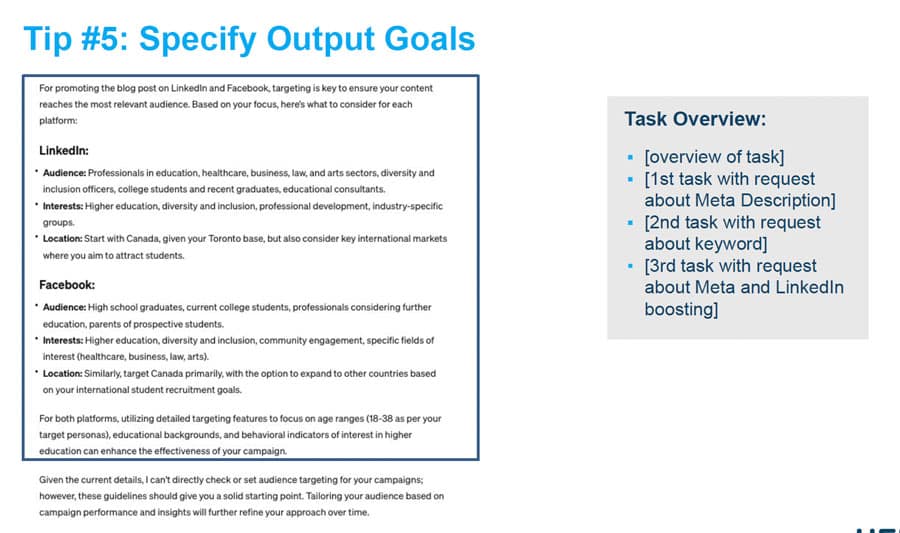

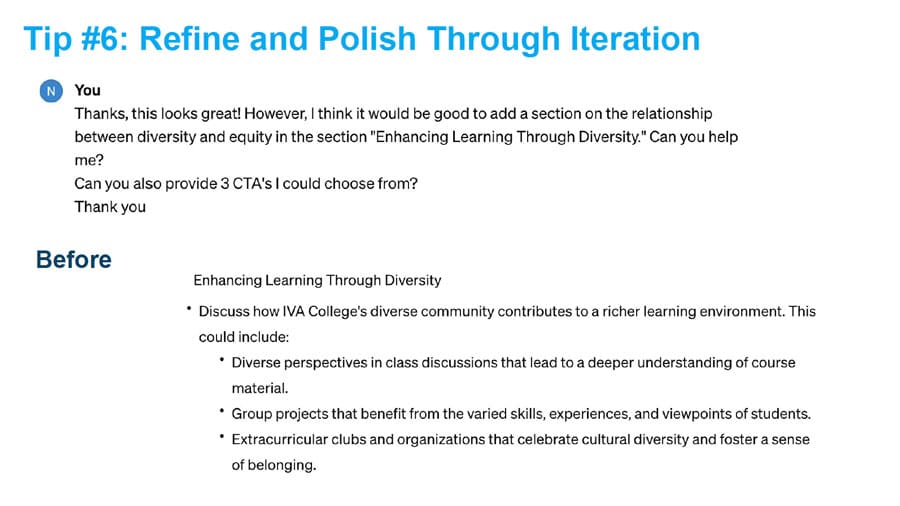

The next few slides, which you can view in the webinar recording, show what GPT produced for this task and what it can do when asked to think a little more deeply about a subject. This is the “polishing” phase, in which the combined work of human and AI, not the AI alone, leads to optimal results. Below you can see that the AI created a very good call to action, but again, without skilled prompting, it could not have done such a good job.

Taza points out:

“You don't always get what you want in the first exchange. As you drill down into sections of the content it provides (adding new sections, asking for additional calls to action, and so on), you can see the ‘after’ and find that it is much more satisfying.”
