Without an efficient way to extract more computing power from existing infrastructure, organizations are often forced to purchase additional hardware or delay projects. This can lead to longer wait times for results and cause them to lose ground to competitors. The problem is exacerbated by the rise of AI workloads that demand heavy GPU compute.

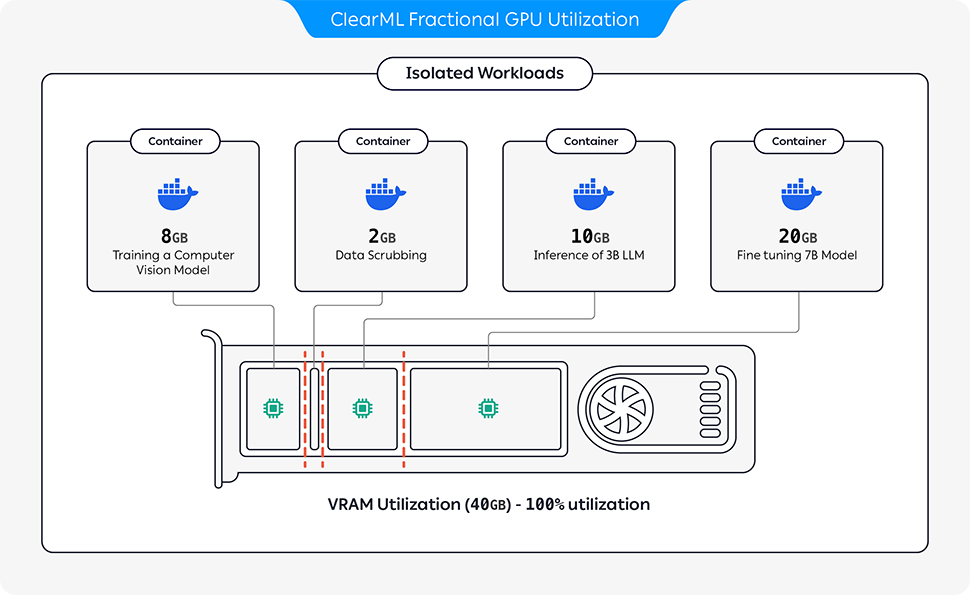

ClearML has come up with what we believe is the perfect solution to this problem: Fractional GPU capabilities for open source users. This allows a single GPU to be “split” to run multiple AI tasks simultaneously.

This move harkens back to the early days of computing, when mainframes were shared among individuals and organizations, allowing them to utilize computing power without purchasing additional hardware.

Fractional capabilities of Nvidia GPUs

According to ClearML, this new feature allows DevOps professionals and AI infrastructure leaders to partition Nvidia GTX, RTX, and data center-grade MIG-enabled GPUs into smaller units to support multiple AI and HPC workloads, allowing users to switch between small R&D jobs and larger, more demanding training jobs.

This approach supports multi-tenancy and provides secure and confidential computing with hard memory limits. According to ClearML, stakeholders can run isolated, parallel workloads on a single shared computing resource, increasing efficiency and reducing costs.
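To make the idea of hard memory limits on a shared GPU concrete, here is a minimal sketch in Python. It is not ClearML's implementation or API: the `SharedGpu` class, its methods, the job names, and the 24 GB card size are all hypothetical and chosen only for illustration. It models a single GPU whose memory is handed out in fixed slices and refuses any job whose limit would not fit.

```python
from dataclasses import dataclass, field


@dataclass
class SharedGpu:
    """Toy model of one physical GPU shared by several jobs (hypothetical)."""
    total_mem_gb: float
    allocations: dict[str, float] = field(default_factory=dict)

    @property
    def free_mem_gb(self) -> float:
        # Memory not yet reserved by any job.
        return self.total_mem_gb - sum(self.allocations.values())

    def try_allocate(self, job: str, mem_limit_gb: float) -> bool:
        """Reserve a hard memory limit for `job`; refuse if it does not fit."""
        if mem_limit_gb <= 0 or job in self.allocations:
            return False
        if mem_limit_gb > self.free_mem_gb:
            return False
        self.allocations[job] = mem_limit_gb
        return True

    def release(self, job: str) -> None:
        # Free the slice held by a finished job (no-op if unknown).
        self.allocations.pop(job, None)


gpu = SharedGpu(total_mem_gb=24.0)  # assumed card size, for illustration only
results = {
    "notebook": gpu.try_allocate("notebook", 4.0),
    "eval": gpu.try_allocate("eval", 8.0),
    "training": gpu.try_allocate("training", 16.0),  # only 12 GB left: refused
}
```

In this toy model, the larger training job is admitted only after a smaller job calls `release`, which mirrors the switch between small R&D jobs and larger training jobs described above.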

“Our new free product now supports a broader range of fractional capabilities on Nvidia GPUs than any other company. ClearML is helping the community build better AI faster at any scale. As part of our commitment to this, we are democratizing access to computing,” said Moses Guttmann, CEO and Co-Founder of ClearML. “We hope that organizations with mixed infrastructure can use ClearML to get more out of their existing compute and resources.”

The new open source fractional GPU functionality is available for free on ClearML's GitHub page.